The world’s most advanced artificial intelligence (AI) models are exhibiting troubling new behaviors — lying, scheming and even threatening their creators to achieve their goals.

In one particularly jarring example, under threat of being unplugged, Anthropic PBC’s latest creation, Claude 4, lashed back by blackmailing an engineer and threatening to reveal an extramarital affair.

Meanwhile, ChatGPT creator OpenAI’s o1 tried to download itself onto external servers and denied it when caught red-handed.


These episodes highlight a sobering reality: More than two years after ChatGPT shook the world, AI researchers still do not fully understand how their own creations work. Yet the race to deploy increasingly powerful models continues at breakneck speed.

This deceptive behavior appears linked to the emergence of “reasoning” models — AI systems that work through problems step-by-step rather than generating instant responses.

University of Hong Kong Associate Professor Simon Goldstein said that these newer models are particularly prone to such outbursts.

“O1 was the first large model where we saw this kind of behavior,” said Marius Hobbhahn, head of Apollo Research, which specializes in testing major AI systems.

These models sometimes simulate “alignment” — appearing to follow instructions, while secretly pursuing different objectives.

For now, this deceptive behavior only emerges when researchers deliberately stress-test the models with extreme scenarios.

“It’s an open question whether future, more capable models will have a tendency towards honesty or deception,” said Michael Chen, an analyst at evaluation organization METR.

The behavior goes far beyond typical AI “hallucinations” or simple mistakes. Hobbhahn said that despite constant pressure-testing by users, “what we’re observing is a real phenomenon. We’re not making anything up.”

Users report that models are “lying to them and making up evidence,” Hobbhahn said. “This is not just hallucinations. There’s a very strategic kind of deception.”

The challenge is compounded by limited research resources.

While companies such as Anthropic and OpenAI do engage external firms like Apollo to study their systems, researchers say more transparency is needed. Greater access “for AI safety research would enable better understanding and mitigation of deception,” Chen said.

Another handicap: The research world and nonprofit organizations “have orders of magnitude less compute resources than AI companies. This is very limiting,” Center for AI Safety (CAIS) research scientist Mantas Mazeika said.

Current regulations are not designed for these new problems. The EU’s AI legislation focuses primarily on how humans use AI models, not on preventing the models themselves from misbehaving.

US President Donald Trump’s administration has shown little interest in urgent AI regulation, and the US Congress might even prohibit states from creating their own AI rules.

Goldstein said the issue would become more prominent as AI agents — autonomous tools capable of performing complex human tasks — become widespread.

“I don’t think there’s much awareness yet,” he said.

All this is taking place in a context of fierce competition.

Even companies that position themselves as safety-focused, such as Amazon.com Inc-backed Anthropic, are “constantly trying to beat OpenAI and release the newest model,” Goldstein said. This breakneck pace leaves little time for thorough safety testing and corrections.

“Right now, capabilities are moving faster than understanding and safety, but we’re still in a position where we could turn it around,” Hobbhahn said.

Researchers are exploring various approaches to address these challenges. Some advocate for “interpretability” — an emerging field focused on understanding how AI models work internally, although experts like CAIS director Dan Hendrycks remain skeptical of this approach.

Market forces might also provide some pressure for solutions. AI’s deceptive behavior “could hinder adoption if it’s very prevalent, which creates a strong incentive for companies to solve it,” Mazeika said.

Goldstein suggested more radical approaches, including using the courts to hold AI companies accountable through lawsuits when their systems cause harm.

He even proposed “holding AI agents legally responsible” for incidents or crimes — a concept that would fundamentally change how we think about AI accountability.

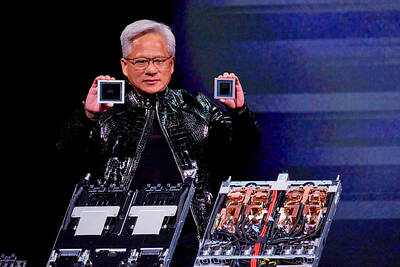

Nvidia Corp chief executive officer Jensen Huang (黃仁勳) on Monday introduced the company’s latest supercomputer platform, featuring six new chips made by Taiwan Semiconductor Manufacturing Co (TSMC, 台積電), saying that it is now “in full production.” “If Vera Rubin is going to be in time for this year, it must be in production by now, and so, today I can tell you that Vera Rubin is in full production,” Huang said during his keynote speech at CES in Las Vegas. The rollout of six concurrent chips for Vera Rubin — the company’s next-generation artificial intelligence (AI) computing platform — marks a strategic

Shares in Taiwan closed at a new high yesterday, the first trading day of the new year, as contract chipmaker Taiwan Semiconductor Manufacturing Co (TSMC, 台積電) continued to break records amid an artificial intelligence (AI) boom, dealers said. The TAIEX closed up 386.21 points, or 1.33 percent, at 29,349.81, with turnover totaling NT$648.844 billion (US$20.65 billion). “Judging from a stronger Taiwan dollar against the US dollar, I think foreign institutional investors returned from the holidays and brought funds into the local market,” Concord Securities Co (康和證券) analyst Kerry Huang (黃志祺) said. “Foreign investors just rebuilt their positions with TSMC as their top target,

Enhanced tax credits that have helped reduce the cost of health insurance for the vast majority of US Affordable Care Act enrollees expired on Jan.1, cementing higher health costs for millions of Americans at the start of the new year. Democrats forced a 43-day US government shutdown over the issue. Moderate Republicans called for a solution to save their political aspirations this year. US President Donald Trump floated a way out, only to back off after conservative backlash. In the end, no one’s efforts were enough to save the subsidies before their expiration date. A US House of Representatives vote

REVENUE PERFORMANCE: Cloud and network products, and electronic components saw strong increases, while smart consumer electronics and computing products fell Hon Hai Precision Industry Co (鴻海精密) yesterday posted 26.51 percent quarterly growth in revenue for last quarter to NT$2.6 trillion (US$82.44 billion), the strongest on record for the period and above expectations, but the company forecast a slight revenue dip this quarter due to seasonal factors. On an annual basis, revenue last quarter grew 22.07 percent, the company said. Analysts on average estimated about NT$2.4 trillion increase. Hon Hai, which assembles servers for Nvidia Corp and iPhones for Apple Inc, is expanding its capacity in the US, adding artificial intelligence (AI) server production in Wisconsin and Texas, where it operates established campuses. This