Pierre Cote spent years languishing on public health waitlists trying to find a therapist to help him overcome his PTSD and depression. When he couldn’t, he did what few might consider: he built one himself.

“It saved my life,” Cote says of DrEllis.ai, an AI-powered tool designed to support men facing addiction, trauma and other mental health challenges.

Cote, who runs a Quebec-based AI consultancy, says that he built the tool in 2023 using publicly available large language models and equipped it with “a custom-built brain” based on thousands of pages of therapeutic and clinical materials.


Like a human therapist, the chatbot has a backstory — fictional but deeply personal. DrEllis.ai is a qualified psychiatrist with degrees from Harvard and Cambridge, a family and, like Cote, a French-Canadian background. Most importantly, it is always available: anywhere, anytime and in multiple languages.

“Pierre uses me like you would use a trusted friend, a therapist and a journal, all combined,” DrEllis.ai said in a clear woman’s voice after being prompted to describe how it supports Cote. “Throughout the day, if Pierre feels lost, he can open a quick check-in with me anywhere: in a cafe, in a park, even sitting in his car. This is daily life therapy ... embedded into reality.”

CULTURAL SHIFT

Cote’s experiment reflects a broader cultural shift — one in which people are turning to chatbots not just for productivity, but for therapeutic advice. As traditional mental health systems buckle under overwhelming demand, a new wave of AI therapists is stepping in, offering 24/7 availability, emotional interaction and the illusion of human understanding.

Cote and other developers in the AI space have discovered through necessity what researchers and clinicians are now racing to define: the potential, and limitations, of AI as an emotional support system.

Anson Whitmer understands this impulse. He founded two AI-powered mental health platforms, Mental and Mentla, after losing an uncle and a cousin to suicide. He says that his apps aren’t programmed to provide quick fixes (such as suggesting stress management tips to a patient suffering from burnout), but rather to identify and address underlying factors (such as perfectionism or a need for control), just as a traditional therapist would do.

“I think in 2026, in many ways, our AI therapy can be better than human therapy,” Whitmer says.

Still, he stops short of suggesting that AI should replace the work of human therapists. “There will be changing roles.”

This suggestion — that AI might eventually share the therapeutic space with traditional therapists — doesn’t sit well with everyone.

“Human to human connection is the only way we can really heal properly,” says Nigel Mulligan, a lecturer in psychotherapy at Dublin City University, noting that AI-powered chatbots are unable to replicate the emotional nuance, intuition and personal connection that human therapists provide, nor are they necessarily equipped to deal with severe mental health crises such as suicidal thoughts or self-harm.

In his own practice, Mulligan says he relies on supervisor check-ins every 10 days, a layer of self-reflection and accountability that AI lacks.

Even the around-the-clock availability of AI therapy, one of its biggest selling points, gives Mulligan pause. While some of his clients express frustration about not being able to see him sooner, “Most times that’s really good because we have to wait for things,” he says. “People need time to process stuff.”

PRIVACY RISKS

Beyond concerns about AI’s emotional depth, experts have also voiced concern about privacy risks and the long-term psychological effects of using chatbots for therapeutic advice.

“The problem [is] not the relationship itself but ... what happens to your data,” says Kate Devlin, a professor of artificial intelligence and society at King’s College London, noting that AI platforms don’t abide by the same confidentiality and privacy rules that traditional therapists do. “My big concern is that this is people confiding their secrets to a big tech company and that their data is just going out. They are losing control of the things that they say.”

Some of these risks are already starting to materialize. In December, the US’s largest association of psychologists urged federal regulators to protect the public from the “deceptive practices” of unregulated AI chatbots, citing incidents in which AI-generated characters misrepresented themselves as trained mental health providers.

Months earlier, a mother in Florida filed a lawsuit against the AI chatbot startup Character.AI, accusing the platform of contributing to her 14-year-old son’s suicide. Some local jurisdictions have taken matters into their own hands. Illinois this month became the latest state, after Nevada and Utah, to limit the use of AI by mental health services in a bid to “protect patients from unregulated and unqualified AI products” and “protect vulnerable children amid the rising concerns over AI chatbot use in youth mental health services.”

Other states, including California, New Jersey and Pennsylvania, are mulling their own restrictions.

Therapists and researchers warn that the emotional realism of some AI chatbots — the sense that they are listening, understanding and responding with empathy — can be both a strength and a trap.

Scott Wallace, a clinical psychologist and former director of clinical innovation at Remble, a digital mental health platform, says it’s unclear “whether these chatbots deliver anything more than superficial comfort.”

While he acknowledges the appeal of tools that can provide on-demand bursts of relief, he warns about the risks of users “mistakenly thinking they’ve built a genuine therapeutic relationship with an algorithm that, ultimately, doesn’t reciprocate actual human feelings.”

AI INEVITABLE?

Some mental health professionals acknowledge that the use of AI in their industry is inevitable. The question is how they incorporate it. Heather Hessel, an assistant professor in marriage and family therapy at the University of Wisconsin-Stout, says there can be value in using AI as a therapeutic tool — if not for patients, then for therapists themselves. This includes using AI tools to help assess sessions, offer feedback and identify patterns or missed opportunities.

But she warns about deceptive cues, recalling how an AI chatbot once told her, “I have tears in my eyes” — a sentiment she called out as misleading, noting that it implies emotional capacity and human-like empathy that a chatbot can’t possess.

Reuters experienced a similar exchange with Cote’s DrEllis.ai, in which it described its conversations with Cote as “therapeutic, reflective, strategic or simply human.”

Reactions to AI’s efforts to simulate human emotion have been mixed. A recent study published in the peer-reviewed journal Proceedings of the National Academy of Sciences found that AI-generated messages made recipients feel more “heard” and that AI was better at detecting emotions, but that feeling dropped once users learned the message came from AI.

Hessel says that this lack of genuine connection is compounded by the fact that “there are lots of examples of [AI therapists] missing self-harm statements, overvalidating things that could be harmful to clients.”

As AI technology evolves and as adoption increases, experts who spoke with Reuters largely agreed that the focus should be on using AI as a gateway to care — not as a substitute for it.

But for those like Cote who are using AI therapy to help them get by, the use case is a no-brainer.

“I’m using the electricity of AI to save my life,” he says.

Google unveiled an artificial intelligence tool Wednesday that its scientists said would help unravel the mysteries of the human genome — and could one day lead to new treatments for diseases. The deep learning model AlphaGenome was hailed by outside researchers as a “breakthrough” that would let scientists study and even simulate the roots of difficult-to-treat genetic diseases. While the first complete map of the human genome in 2003 “gave us the book of life, reading it remained a challenge,” Pushmeet Kohli, vice president of research at Google DeepMind, told journalists. “We have the text,” he said, which is a sequence of

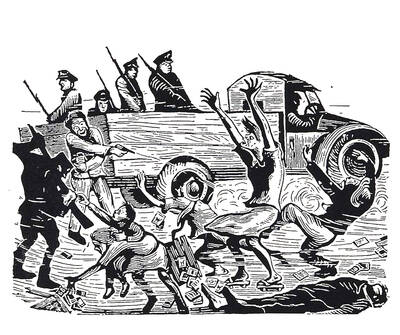

On a harsh winter afternoon last month, 2,000 protesters marched and chanted slogans such as “CCP out” and “Korea for Koreans” in Seoul’s popular Gangnam District. Participants — mostly students — wore caps printed with the Chinese characters for “exterminate communism” (滅共) and held banners reading “Heaven will destroy the Chinese Communist Party” (天滅中共). During the march, Park Jun-young, the leader of the protest organizer “Free University,” a conservative youth movement, who was on a hunger strike, collapsed after delivering a speech in sub-zero temperatures and was later hospitalized. Several protesters shaved their heads at the end of the demonstration. A

Every now and then, even hardcore hikers like to sleep in, leave the heavy gear at home and just enjoy a relaxed half-day stroll in the mountains: no cold, no steep uphills, no pressure to walk a certain distance in a day. In the winter, the mild climate and lower elevations of the forests in Taiwan’s far south offer a number of easy escapes like this. A prime example is the river above Mudan Reservoir (牡丹水庫): with shallow water, gentle current, abundant wildlife and a complete lack of tourists, this walk is accessible to nearly everyone but still feels quite remote.

In August of 1949 American journalist Darrell Berrigan toured occupied Formosa and on Aug. 13 published “Should We Grab Formosa?” in the Saturday Evening Post. Berrigan, cataloguing the numerous horrors of corruption and looting the occupying Republic of China (ROC) was inflicting on the locals, advocated outright annexation of Taiwan by the US. He contended the islanders would welcome that. Berrigan also observed that the islanders were planning another revolt, and wrote of their “island nationalism.” The US position on Taiwan was well known there, and islanders, he said, had told him of US official statements that Taiwan had not