In the pre-dawn hours, Ann Li’s anxieties felt overwhelming. She’d recently been diagnosed with a serious health problem, and she just wanted to talk to someone about it. But she hadn’t told her family, and all her friends were asleep. So instead, she turned to ChatGPT.

“It’s easier to talk to AI during those nights,” said the 30-year-old Taiwanese woman.

In China, Yang, a 25-year-old Guangdong resident, had never seen a mental health professional when she started talking to an AI chatbot earlier this year. Yang says it was difficult to access mental health services, and she couldn’t contemplate confiding in family or friends.


“Telling the truth to real people feels impossible,” she says.

But she was soon talking to the chatbot “day and night.”

Li and Yang are among a growing number of Chinese-speaking people turning to generative AI chatbots instead of professional human therapists. Experts say there is huge potential for AI in the mental health sector, but are concerned about the risks of people in distress turning to the technology, rather than human beings, for medical assistance.

There are few official statistics, but mental health professionals in Taiwan and China have reported rising rates of patients consulting AI before seeing them, or instead of seeing them. Surveys, including a global analysis recently published by Harvard Business Review, show psychological assistance is now a leading reason for adults to use AI chatbots. On social media there are hundreds of thousands of posts from users praising AI for helping them.

The trend comes amid rising rates of mental illness in Taiwan and China, particularly among younger people. Access to services is not keeping pace: appointments are hard to get, and they’re expensive. Chatbot users say AI saves them time and money, gives real answers and is more discreet in a society where there is still stigma around mental health.

“In some way the chatbot does help us: it’s accessible, especially when ethnic Chinese tend to suppress or downplay our feelings,” says Dr Yi-Hsien Su, a clinical psychologist at True Colors in Taiwan, who also works in schools and hospitals to promote mental wellbeing.

“I talk to people from Gen Z and they’re more willing to talk about problems and difficulties … But there’s still much to do.”

In Taiwan, the most popular chatbot is ChatGPT. In China, where Western apps like ChatGPT are banned, people have turned to domestic offerings like Baidu’s Ernie Bot, or the recently launched DeepSeek. All are advancing rapidly and are incorporating wellbeing and therapy into their responses as demand increases.

User experiences vary. Li says ChatGPT gives her what she wants to hear, but that can also be predictable and uninsightful. She also misses the process of self-discovery in counseling.

“I think AI tends to give you the answer, the conclusion that you would get after you finish maybe two or three sessions of therapy,” she says.

Yet 27-year-old Nabi Liu, a Taiwanese woman based in London, has found the experience to be very fulfilling.

“When you share something with a friend, they might not always relate. But ChatGPT responds seriously and immediately,” she says. “I feel like it’s genuinely responding to me each time.”

Experts say AI can assist people who are in distress but perhaps don’t need professional help yet, like Li, or those who need a little encouragement to take the next step.

Yang says she doubted whether her struggles were serious enough to warrant professional help.

“Only recently have I begun to realize that I might actually need a proper diagnosis at a hospital,” she says.

“Going from being able to talk [to AI] to being able to talk to real people might sound simple and basic, but for the person I was before, it was unimaginable.”

But experts have also raised concerns about people falling through the cracks, missing the signs that Yang saw for herself, and not getting the help they need.

There have been tragic cases in recent years of young people in distress seeking help from chatbots instead of professionals, and later taking their own lives.

“AI mostly deals with text, but there are things we call nonverbal input. When a patient comes in, maybe they act differently to how they speak, but we can recognize those inputs,” Su says.

A spokesperson for the Taiwan Counseling Psychology Association says AI can be an “auxiliary tool,” but cannot replace professional assistance, “let alone the intervention and treatment of psychologists in crisis situations.”

“AI has the potential to become an important resource for promoting the popularization of mental health. However, the complexity and interpersonal depth of the clinical scene still require the real ‘present’ psychological professional.”

The association says AI can be “overly positive,” miss cues and delay necessary medical care. It also operates outside the peer review and ethics codes of the profession.

“In the long run, unless AI develops breakthrough technologies beyond current imagination, the core structure of psychotherapy should not be shaken.”

Su says he’s excited about the ways AI could modernize and improve his industry, noting potential uses in training of professionals and detecting people online who might need intervention. But for now he recommends people approach the tools with caution.

“It’s a simulation, it’s a good tool, but has limits and you don’t know how the answer was made,” he says.

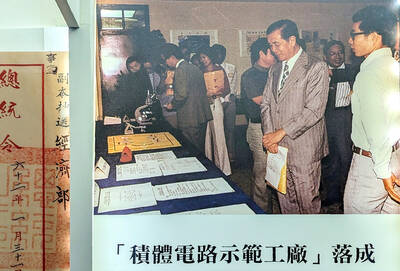

Oct. 27 to Nov. 2 Over a breakfast of soymilk and fried dough costing less than NT$400, seven officials and engineers agreed on a NT$400 million plan — unaware that it would mark the beginning of Taiwan’s semiconductor empire. It was a cold February morning in 1974. Gathered at the unassuming shop were Economics minister Sun Yun-hsuan (孫運璿), director-general of Transportation and Communications Kao Yu-shu (高玉樹), Industrial Technology Research Institute (ITRI) president Wang Chao-chen (王兆振), Telecommunications Laboratories director Kang Pao-huang (康寶煌), Executive Yuan secretary-general Fei Hua (費驊), director-general of Telecommunications Fang Hsien-chi (方賢齊) and Radio Corporation of America (RCA) Laboratories director Pan

The consensus on the Chinese Nationalist Party (KMT) chair race is that Cheng Li-wun (鄭麗文) ran a populist, ideological back-to-basics campaign and soundly defeated former Taipei mayor Hau Lung-bin (郝龍斌), the candidate backed by the big institutional players. Cheng tapped into a wave of popular enthusiasm within the KMT, while the institutional players’ get-out-the-vote abilities fell flat, suggesting their power has weakened significantly. Yet, a closer look at the race paints a more complicated picture, raising questions about some analysts’ conclusions, including my own. TURNOUT Here is a surprising statistic: Turnout was 130,678, or 39.46 percent of the 331,145 eligible party

The classic warmth of a good old-fashioned izakaya beckons you in, all cozy nooks and dark wood finishes, as tables order a third round and waiters sling tapas-sized bites and assorted — sometimes unidentifiable — skewered meats. But there’s a romantic hush about this Ximending (西門町) hotspot, with cocktails savored, plating elegant and never rushed and daters and diners lit by candlelight and chandelier. Each chair is mismatched and the assorted tables appear to be the fanciest picks from a nearby flea market. A naked sewing mannequin stands in a dimly lit corner, adorned with antique mirrors and draped foliage

The election of Cheng Li-wun (鄭麗文) as chair of the Chinese Nationalist Party (KMT) marked a triumphant return of pride in the “Chinese” in the party name. Cheng wants Taiwanese to be proud to call themselves Chinese again. The unambiguous winner was a return to the KMT ideology that formed in the early 2000s under then chairman Lien Chan (連戰) and president Ma Ying-jeou (馬英九) put into practice as far as he could, until ultimately thwarted by hundreds of thousands of protestors thronging the streets in what became known as the Sunflower movement in 2014. Cheng is an unambiguous Chinese ethnonationalist,