This week Meta Platforms announced the elimination of its fact-checking program in the US along with rollbacks to content moderation policies on “hateful conduct.” The measures would undoubtedly open the floodgates to more hateful, harassing and inciting content on Facebook and Instagram. Immigrants and LGBTQ+ communities are two of the groups most likely to be affected.

Last month, after US president-elect Donald Trump won the election, Zuckerberg visited the incoming leader at Mar-a-Lago, and then Meta sent US$1 million to his inauguration fund. When asked for comment about the company’s policy changes, Trump said that Zuckerberg was “probably” influenced by his threats to imprison the tech CEO.

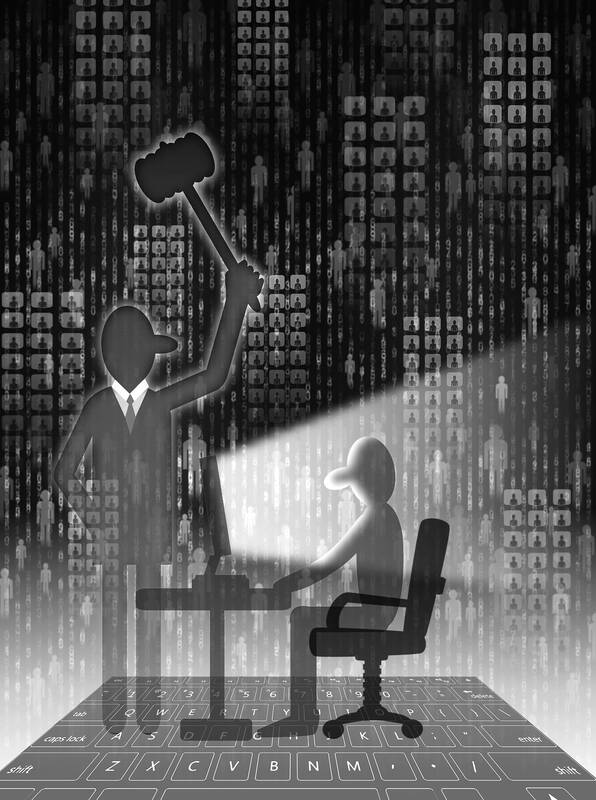

This is the making of a mafia state, where open threats are met with lavish gifts and public praise.

Illustration: Yusha

Looking back on the history of content moderation, it is easy to conclude that social media companies tailor their products to suit the needs of those with the power to regulate them. This time is no different, except the consequences for vulnerable groups would probably be worse.

By changing Meta’s policy on fact-checking to appease Trump, Zuckerberg is laying the foundation for friction-free oligarchy, where those with the most power and influence no longer have to contend with facts or corrections.

It was during the first Trump administration that tech companies realized social media was susceptible to foreign and domestic media manipulation campaigns, as their products were used to reach millions with lies, grift, conspiracy and hate. Journalists uncovered large-scale media manipulation campaigns run by Cambridge Analytica and Russia’s Internet Research Agency, which weaponized Facebook for political ends during the 2016 US election and Brexit.

Instead of taking responsibility and aggressively rooting out abusers, Zuckerberg turned to his advisers, a well-known cadre of fixers who cut their teeth in politics. Most were educated at Harvard and well-seasoned in political doublespeak, but governing speech globally became the challenge of their lifetimes.

Reacting to increasing public criticism of "fake news" on Facebook in November 2016, Zuckerberg posted a lengthy message about misinformation to his own profile. In it he stated that the social media company had reached out to "respected fact-checking organizations" and was moving methodically to avoid becoming "arbiters of truth." By December, Adam Mosseri, then the company's vice president of News Feed, had described new protocols for reporting false stories. These shifted responsibility for content moderation onto third-party fact-checkers who had signed the international fact-checking code of principles maintained by the Poynter Institute for Media Studies, a nonprofit media organization.

Despite the efforts, misinformation continued to flourish, especially among right-leaning audiences.

In 2018, the company's then-chief operating officer, Sheryl Sandberg, who had been chief of staff to the US secretary of the treasury before joining Google, championed an "oversight board," nicknamed the "supreme court" of Facebook, to review and adjudicate controversial moderation decisions.

In early 2021, Nick Clegg, a former UK deputy prime minister who was then Meta's vice president of global affairs and communications, wrote the decision to ban Trump indefinitely after Trump used the company's products to promote an attack on the Capitol. Zuckerberg said at the time that "the risks of allowing the president to continue to use our service" were "simply too great."

Meta’s content arbitration system was costly and clunky, but on the upside, it compelled some transparency about content moderation decisions and provided conclusive evidence that misinformation is a feature, not a bug, of the right-wing media ecosystem.

Now Clegg, who was promoted to president of global affairs in 2022, is being replaced by Joel Kaplan, a former senior staffer to former US president George W. Bush and a participant in the 2000 Brooks Brothers riot.

After Meta’s Jan. 3 announcement that Clegg was stepping down, Kaplan went on Fox News to share his enthusiasm for the policy changes, lavishing praise on Trump as he did so. His influence on the new direction of Meta is obvious and troubling for Internet freedom advocates, who do not want social media platforms to continue to be pawns in a political chess match.

In a talking point that could have been taken from Trump himself, Zuckerberg said that “the fact-checkers have just been too politically biased and have destroyed more trust than they’ve created, especially in the US.”

Crucially, academic research on fact-checking reveals the opposite of Zuckerberg’s claims. A study by researchers at the Massachusetts Institute of Technology’s Sloan School of Management shows that exposure to fact-checks reduces belief in misinformation, even among right-wing audiences who doubt the efficacy of fact-checking.

Meta has never applied its rules evenly to all users. Whistle-blower Frances Haugen showed that Meta maintained a list of high-profile accounts that were repeatedly allowed to break platform rules. Meta has historically excluded politicians from fact-check eligibility, and ending the fact-checking program is likely to be a windfall mostly for right-wing users of Meta products, who are more likely to share misinformation on Facebook, according to research conducted by academics in partnership with Meta and published in Science.

The lack of fact-checks would probably lead to the aggressive spread of conspiracy theories and hateful content on Meta products, which would put advertisers and brand safety to the test.

In place of trained fact-checkers who are experts at detecting, documenting and debunking misinformation, Meta plans to deploy a rogues' gallery of users to police speech on the platform, adopting the same system of "community notes" as Elon Musk's X.

However, moderation on X is also going poorly. After facing a rapidly diminishing user base and advertiser boycotts, X is valued at 20 percent of what Musk paid for it.

A clear example of how moderation on the platform reflects the whims and preferences of Musk emerged on Jan. 4, when Musk was being admonished by die-hard “Make America Great Again” (MAGA) supporters over the issue of H-1B visas for foreign workers. Musk’s response was to optimize the platform for “unregretted user-seconds” by focusing on more entertaining content, while also banning and demonetizing a number of MAGA stalwarts.

In the past, Musk has decried “deplatforming” and demonetization as a censorship tactic solely employed by the left. Now that argument cannot stand as Mr “Dark MAGA” himself plays the algorithms, with Zuckerberg following along.

Instead of blaming fact-checkers, Zuckerberg should just admit that he is changing the rules to reflect Trump's political agenda and will tune the algorithms so that Trump can build a base on Facebook and Instagram, after Musk paved the way on X.

“It’s time to get back to our roots around free expression,” Zuckerberg said.

(That is not Meta’s origin story. Facebook’s predecessor invited Harvard University students to rate the physical attractiveness of female classmates, which Zuckerberg tries to memory-hole every chance he gets.)

The UN's Universal Declaration of Human Rights defines free expression as the human right to "seek, receive and impart information." It does not guarantee an audience for that speech or its amplification. Nor does it protect speech from being fact-checked or labeled online. That power is held only by the corporations that control the flow of content across platforms.

Far from enabling free expression, Meta’s changes to the “hateful conduct” policy signal a return to Facebook’s more misogynistic roots. In a blog post, Meta pledged to align moderation policies with “mainstream discourse” on gender and immigration specifically, two issues championed by Trump and Musk during last year’s presidential campaign. It is now OK to refer to LGBTQ+ people as mentally ill and to denigrate immigrants on Meta products.

It is a telltale sign of technofascism when communication systems are disrupted by changes in political power after every election. Protection for vulnerable groups online continues to depend on the political ambitions of whoever owns or runs a social media platform.

That is further proof that social media is not a free speech machine. It never was. Instead, content moderation is the core product of social media: algorithms decide whether speech is visible, at what volume and whether it will meet counterspeech. Contrary to Zuckerberg's claims, it was not the fact-checkers who ruined Meta products. It has always been insider political operatives, including Clegg, Sandberg and Kaplan, who turned social media into a new frontier for the culture wars.

Joan Donovan is an assistant professor of journalism and emerging media studies at Boston University. She is the founder of the Critical Internet Studies Institute.
