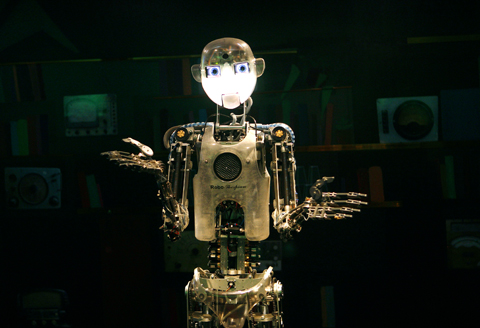

The boy, a dark-haired 6-year-old, is playing with a new companion.

PHOTO: REUTERS

The two hit it off quickly — unusual for the 6-year-old, who has autism — and the boy is imitating his playmate’s every move, now nodding his head, now raising his arms.


“Like Simon Says,” says the autistic boy’s mother, seated next to him on the floor.

Yet soon he begins to withdraw; in a video of the session, he covers his ears and slumps against the wall.


But the companion, a 91cm-tall robot being tested at the University of Southern California, maintains eye contact and performs another move, raising one arm up high.

Up goes the boy’s arm — and now he is smiling at the machine.

In a handful of laboratories around the world, computer scientists are developing robots like this one: highly programmed machines that can engage people and teach them simple skills, including household tasks, vocabulary or, as in the case of the boy, playing, elementary imitation and taking turns.

So far, the teaching has been very basic, delivered mostly in experimental settings, and the robots are still works in progress, a hackers’ gallery of moving parts that, like mechanical savants, each do some things well at the expense of others.

Yet the most advanced models are fully autonomous, guided by artificial intelligence software like motion tracking and speech recognition, which can make them just engaging enough to rival humans at some teaching tasks.

Researchers say the pace of innovation is such that these machines should begin to learn as they teach, becoming the sort of infinitely patient, highly informed instructors that would be effective in subjects like foreign language or in repetitive therapies used to treat developmental problems like autism.

LESSONS FROM RUBI

“Kenka,” says a childlike voice. “Ken-ka.”

Standing on a polka-dot carpet at a preschool on the campus of the University of California, San Diego, a robot named RUBI is teaching Finnish to a 3-year-old boy.

RUBI looks like a desktop computer come to life: its screen-torso, mounted on a pair of shoes, sprouts mechanical arms and a lunchbox-sized head, fitted with video cameras, a microphone and voice capability. RUBI wears a bandanna around its neck and a fixed happy-face smile, below a pair of large, plastic eyes.

It picks up a white sneaker and says kenka, the Finnish word for shoe, before returning it to the floor. “Feel it; I’m a kenka.”

In a video of this exchange, the boy picks up the sneaker, says “kenka, kenka” — and holds up the shoe for the robot to see.

In the San Diego classroom where RUBI has taught Finnish, researchers are finding that the robot helps preschool children score significantly better on tests than less interactive methods, such as learning from tapes.

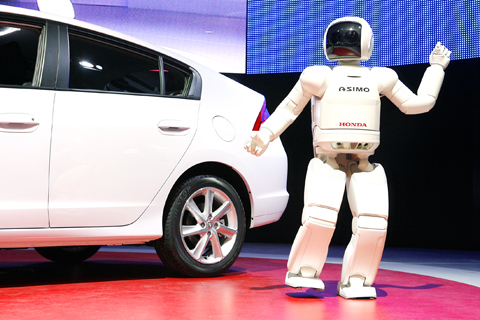

Researchers in social robotics — a branch of computer science devoted to enhancing communication between humans and machines — at Honda Labs in Mountain View, California, have found a similar result with their robot, a 91cm-tall character called Asimo, which looks like a miniature astronaut. In one 20-minute session the machine taught grade-school students how to set a table — improving their accuracy by about 25 percent, a recent study found.

MAKING THE CONNECTION

In a lab at the University of Washington, Morphy, a pint-sized robot, catches the eye of an infant girl and turns to look at a toy.

No luck; the girl does not follow its gaze, as she would a human’s.

In a video the researchers made of the experiment, the girl next sees the robot “waving” to an adult. Now she’s interested; seeing the machine interact leads the young brain to register it as a social being. She begins to track what the robot is looking at, to the right, the left, down. The machine has elicited what scientists call gaze-following, an essential first step of social exchange.

“Before they have language, infants pay attention to what I call informational hot spots,” where their mother or father is looking, said Andrew Meltzoff, a psychologist who is co-director of the university’s Institute for Learning and Brain Sciences. This, he said, is how learning begins.

This basic finding, to be published later this year, is one of dozens from a field called affective computing that is helping scientists discover exactly which features of a robot make it most convincingly “real” as a social partner, a helper, a teacher.

The San Diego researchers found that if RUBI reacted to a child’s expression or comment too fast, it threw off the interaction; the same happened if the response was too slow. But if the robot reacted within about a second and a half, child and machine were smoothly in sync.

One way to begin this process is to have a child mimic the physical movements of a robot and vice versa. In a continuing study financed by the National Institutes of Health, scientists at the University of Connecticut are conducting therapy sessions for children with autism using a French robot called Nao, a 61cm-tall humanoid that looks like an elegant Transformer toy. The robot, remotely controlled by a therapist, demonstrates martial arts kicks and chops and urges the child to follow suit; then it encourages the child to lead.

This simple mimicry seems to build a kind of trust, and increase sociability, said Anjana Bhat, an assistant professor in the department of education who is directing the experiment.

LEARNING FROM HUMANS

On a recent Monday afternoon, Crystal Chao, a graduate student in robotics at the Georgia Institute of Technology, was teaching a 1.5m-tall robot named Simon to put away toys. She had given some instructions — the flower goes in the red bin, the block in the blue bin — and Simon had correctly put away several of these objects. But now the robot was stumped, its doughboy head tipped forward, its fawn eyes blinking at a green toy water sprinkler.

Chao repeated her query.

“Let me see,” said Simon, in a childlike machine voice, reaching to pick up the sprinkler. “Can you tell me where this goes?”

“In the green bin,” came the answer.

Simon nodded, dropping it in that bin.

“Makes sense,” the robot said.

In addition to tracking motion and recognizing language, Simon accumulates knowledge through experience.

Just as humans can learn from machines, machines can learn from humans, said Andrea Thomaz, an assistant professor of interactive computing at Georgia Tech who directs the project.

This ability to monitor and learn from experience is the next great frontier for social robotics — and it probably depends, in large part, on unraveling the secrets of how the human brain accumulates information during infancy.

In San Diego, researchers are trying to develop a human-looking robot with sensors that approximate the complexity of a year-old infant’s abilities to feel, see and hear. Babies learn, seemingly effortlessly, by experimenting, by mimicking, by moving their limbs. Could a machine with sufficient artificial intelligence do the same? And what kind of learning systems would be sufficient?

The research group has bought a US$70,000 robot, built by a Japanese company, that is controlled by a pneumatic pressure system that will act as its senses, in effect helping it map out the environment by “feeling” in addition to “seeing” with embedded cameras. And that is the easy part.

The researchers are shooting for nothing less than capturing the foundation of human learning — or, at least, its artificial intelligence equivalent. If robots can learn to learn, on their own and without instruction, they can in principle make the kind of teachers that are responsive to the needs of a class, even an individual child.
