When a friend messaged voice actor Bev Standing to ask whether she was the voice of TikTok’s text-to-speech feature, the Canadian performer’s surprise soon turned to irritation.

She had never done work for the popular social media platform, yet the voice was unmistakably hers.

Standing concluded that speech recordings she had done for another client years earlier had somehow been obtained by TikTok, and fed into an algorithm which allows users to turn written text into a voiceover for video clips in the app.

She sued TikTok in a case that was settled in September — one that performing artists say was symptomatic of the growing challenges artificial intelligence (AI) poses to creatives.

“I am a business. I need to protect my product, and my product is my voice,” Standing said. “They [TikTok] weren’t my client. It’s like me buying a car and you driving it away. You didn’t buy it, you don’t get to drive it.”

From digitally resurrecting dead celebrities to improving lip sync for movies dubbed in foreign languages, AI has been increasingly deployed in the film and audio industries in recent years, sparking debates over ethics and copyright.

A documentary on the late chef Anthony Bourdain faced a backlash after using AI to recreate his voice.

Working actors and other performing artists say they are also concerned about the impacts of AI on their livelihoods, with some calling for creators to be given more rights over their work and how it is used.

British actor and comedian Rick Kiesewetter signed away all rights to recordings of his voice and face movements in a job for a tech firm several years ago.

Now he feels somewhat uneasy about it.

“I just don’t know where this stuff is going to end up ... It could even end up in porn as far as I know,” he said.

LEGAL GAPS

Actors’ groups said artists risk losing work to AI, and that performers who lend their voice or acting skills to computers are often not fairly compensated.

While AI has created new employment opportunities, it has also become increasingly common for performers to have their image or voice used without permission, according to Equity, a British union representing workers from the performing arts.

The union has also raised concerns that performers who have done work for AI projects do not fully understand their rights.

“Like any technology, there’s a lag to it,” said Kiesewetter, an Equity member, who said some agents were not yet up to speed on the intricacies of tech companies’ contracts.

Artists worldwide often have no real protection against AI-generated imitations such as deepfake videos, said Mathilde Pavis, a law lecturer at the University of Exeter in Britain, as laws have not kept up with tech developments.

Numerous countries, including Britain and France, have performers' rights legislation, which typically allows artists to withhold consent to, or get paid for, recordings of their performances.

But the laws generally do not protect the creative content of performances, meaning imitations — such as those generated by AI — are allowed, said Pavis.

“We assumed that a performance could only be reproduced or bootlegged or stolen on scale if it’s recorded on something,” she said.

Both she and Equity have argued that new laws strengthening rights for creatives are needed.

Pavis said it would be better to give performers copyright over the content of their performances to hand them full control. Artists would then have to consent to performances being used, and could get a passive income stream, said Pavis.

AI AUTHORS?

Ryan Abbott, a professor of law and health science at the University of Surrey in Britain who has written a book on law and AI, says algorithms should have rights, too.

Computers are increasingly skilled at creating anything from music to stories, but their work lacks full protection in the US as only humans can be listed as authors, he said.

In a bid to change that, Abbott is bringing a legal case against the US Copyright Office seeking to register a digital artwork made by a computer, with the AI listed as its author and the AI's owner as the copyright owner who would ultimately benefit from any profits.

While machines do not need rights, lack of copyright protection leaves their owners and developers exposed, and risks stifling investment and innovation, he said.

Works made by AI should also be clearly identified to avoid devaluing the role of human artists, he added.

“You can have someone record my voice for an hour and train the AI to make music. And suddenly you can have an AI make award-winning music that sounded like it was coming from me,” he said.

“If we did that, I would say it would be unfair to have me listed as the author, because it would suggest I’m a great musician, when really, I’m absolutely terrible.”

As AI typically generates work after having “learnt” its craft from large amounts of human-made pieces, some say human artists should be compensated if their performance is used in the process.

On this and other issues, it will come down to what lawmakers decide is fair, said Abbott.

“Do we want to make it easier for businesses to do business, or ... for creatives to get compensation?” he said.

With recordings of work calls and video conversations having become commonplace during the pandemic, these decisions will have repercussions outside the entertainment industry in spheres such as workplace rights, said Pavis.

“Performers are a little bit the lab rats for what we think is acceptable and unacceptable, legal and illegal,” she said.

“They are at the frontier of the debate around what use of our data and our faces for work will be seen as appropriate.”

In late October of 1873 the government of Japan decided against sending a military expedition to Korea to force that nation to open trade relations. Across the government supporters of the expedition resigned immediately. The spectacle of revolt by disaffected samurai began to loom over Japanese politics. In January of 1874 disaffected samurai attacked a senior minister in Tokyo. A month later, a group of pro-Korea expedition and anti-foreign elements from Saga prefecture in Kyushu revolted, driven in part by high food prices stemming from poor harvests. Their leader, according to Edward Drea’s classic Japan’s Imperial Army, was a samurai

The following three paragraphs are just some of what the local Chinese-language press is reporting on breathlessly and following every twist and turn with the eagerness of a soap opera fan. For many English-language readers, it probably comes across as incomprehensibly opaque, so bear with me briefly dear reader: To the surprise of many, former pop singer and Democratic Progressive Party (DPP) ex-lawmaker Yu Tien (余天) of the Taiwan Normal Country Promotion Association (TNCPA) at the last minute dropped out of the running for committee chair of the DPP’s New Taipei City chapter, paving the way for DPP legislator Su

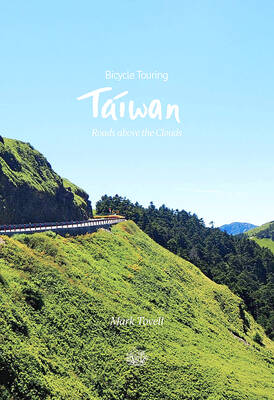

It’s hard to know where to begin with Mark Tovell’s Taiwan: Roads Above the Clouds. Having published a travelogue myself, as well as having contributed to several guidebooks, at first glance Tovell’s book appears to inhabit a middle ground — the kind of hard-to-sell nowheresville publishers detest. Leaf through the pages and you’ll find them suffuse with the purple prose best associated with travel literature: “When the sun is low on a warm, clear morning, and with the heat already rising, we stand at the riverside bike path leading south from Sanxia’s old cobble streets.” Hardly the stuff of your

Located down a sideroad in old Wanhua District (萬華區), Waley Art (水谷藝術) has an established reputation for curating some of the more provocative indie art exhibitions in Taipei. And this month is no exception. Beyond the innocuous facade of a shophouse, the full three stories of the gallery space (including the basement) have been taken over by photographs, installation videos and abstract images courtesy of two creatives who hail from the opposite ends of the earth, Taiwan’s Hsu Yi-ting (許懿婷) and Germany’s Benjamin Janzen. “In 2019, I had an art residency in Europe,” Hsu says. “I met Benjamin in the lobby