Amazon Web Services (AWS) on Tuesday launched its in-house-built Trainium3 artificial intelligence (AI) chip, marking a significant push to compete with Nvidia Corp in the lucrative market for AI computing power.

The move intensifies competition in the AI chip market, where Nvidia dominates with an estimated 80 to 90 percent market share for products used in training large language models that power the likes of ChatGPT.

Google last week caused tremors in the industry when it was reported that Facebook-parent Meta Platforms Inc would employ Google AI chips in data centers, signaling new competition for Nvidia.

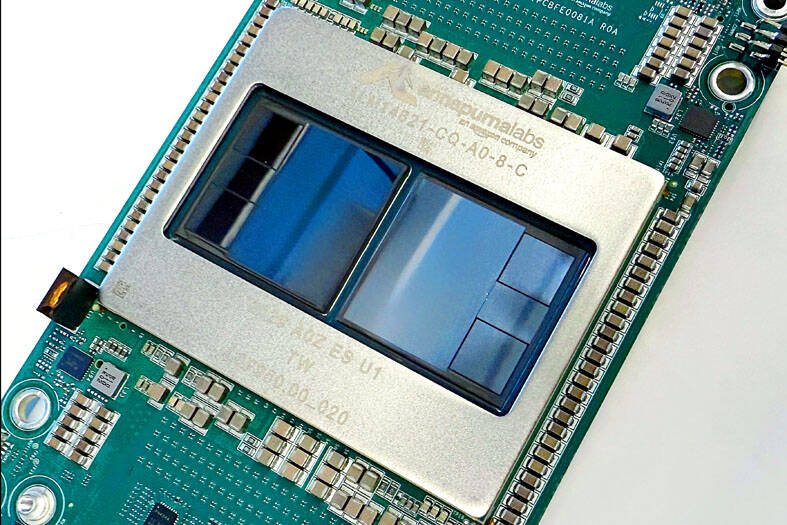

Photo: Amazon Web Services/Handout via Reuters

This followed the release last month of Google’s latest AI model, which was trained using the company’s in-house chips, not Nvidia’s.

Responding to Google’s successes, Nvidia wrote on social media that it was “delighted” by the competition, but added that Nvidia “is a generation ahead of the industry.”

AWS, which would make the technology available to its cloud computing clients, said its new chip costs less than rival products and delivers more than four times the computing performance of its predecessor while using 40 percent less energy.

“Trainium3 offers the industry’s best price performance for large-scale AI training and inference,” AWS CEO Matt Garman said at a launch event in Las Vegas. Inference is the execution phase of AI, in which a model that has already been trained on large data sets is put to work performing tasks in real-world scenarios.

Energy consumption is one of the major concerns about the AI revolution, with major tech companies scaling back or pausing their net zero emissions commitments as they race to keep up with the technology.

AWS said its chip could reduce the cost of training and operating AI models by up to 50 percent compared with systems that use equivalent graphics processing units, mainly from Nvidia.

“Training cutting-edge models now requires infrastructure investments that only a handful of organizations can afford,” AWS said, positioning Trainium3 as a way to democratize access to high-powered AI computing.

AWS said several companies are already using the technology, including Anthropic PBC, maker of the Claude AI assistant and a competitor to ChatGPT-maker OpenAI.

AWS also announced that it is already developing Trainium4, which is expected to deliver at least three times the performance of Trainium3 for standard AI workloads.

The next-generation chip would support Nvidia’s technology, allowing it to work alongside that company’s servers and hardware.

Amazon’s in-house chip development reflects a broader trend among cloud service providers seeking to reduce dependence on external suppliers while offering customers more cost-effective alternatives for AI workloads.
