In Mary Shelley’s novel Frankenstein; or, The Modern Prometheus, scientist Victor Frankenstein famously uses dead body parts to create a hyperintelligent “superhuman” monster that — driven mad by human cruelty and isolation — ultimately turns on its creator. Since its publication in 1818, Shelley’s story of scientific research gone wrong has come to be seen as a metaphor for the danger and folly of trying to endow machines with human-like intelligence.

Shelley’s tale has taken on new resonance with the rapid emergence of generative artificial intelligence (AI).

On March 22, the Future of Life Institute issued an open letter signed by hundreds of tech leaders, including Tesla CEO Elon Musk and Apple cofounder Steve Wozniak, calling for a six-month pause, or failing that a government-imposed moratorium, in developing AI systems more powerful than OpenAI’s newly released GPT-4.

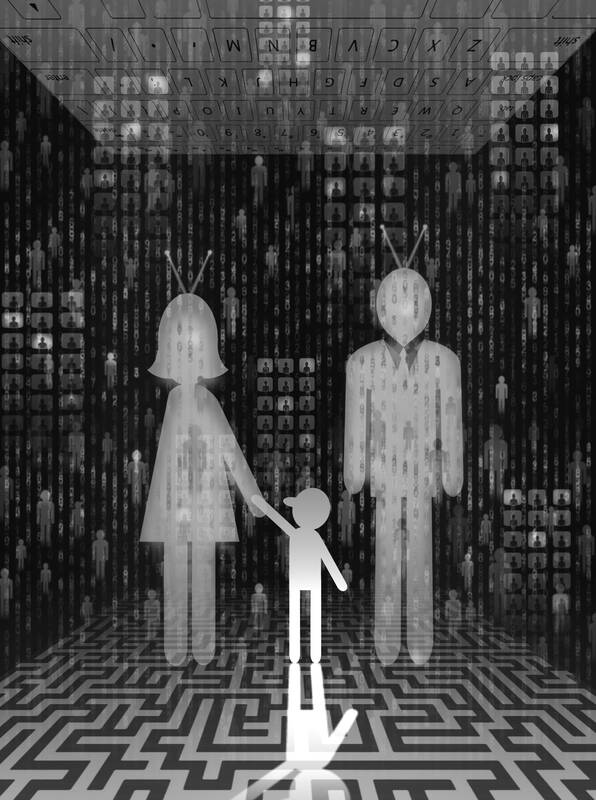

Illustration: Yusha

“AI systems with human-competitive intelligence can pose profound risks to society and humanity,” says the letter, which has more than 25,000 signatories.

The authors go on to speak of the “out-of-control” race “to develop and deploy ever more powerful digital minds that no one — not even their creators — can understand, predict, or reliably control.”

Musk, the world’s second-richest person, is in many respects the Victor Frankenstein of our time. The famously boastful South Africa-born billionaire has already tried to automate the entire process of driving, albeit with mixed results, claimed to invent a new mode of transportation with the Boring Co’s still hypothetical hyperloop project and declared his intention to “preserve the light of consciousness” by using his rocket company, SpaceX, to establish a colony on Mars.

Musk also happens to be a cofounder of OpenAI — he resigned from the company’s board in 2018 following a failed takeover attempt.

One of Musk’s pet projects is to combine AI and human consciousness. In August 2020, he showcased a pig with a computer chip implanted in its brain to demonstrate the so-called “brain-machine interface” developed by his tech start-up Neuralink. When Gertrude the pig ate or sniffed straw, a graph tracked its neural activity.

Musk said that this technology could be used to treat memory loss, anxiety, addiction and even blindness.

Months later, Neuralink released a video of a monkey playing a video game with its mind using an implanted device.

These stunts were accompanied by Musk’s usual braggadocio.

He said he hoped that Neuralink’s brain augmentation technology could usher in an era of “superhuman cognition” in which computer chips that optimize mental functions would be widely and cheaply available.

The procedure to implant them would be fully automated and minimally invasive, he said.

Every few years, as the technology improves, the chips could be replaced with a new model.

However, this is all hypothetical: Neuralink is still struggling to keep its test monkeys alive.

While Musk tries to create cyborgs, humans could soon find themselves replaced by machines. In his 2005 book The Singularity Is Near, futurist Ray Kurzweil predicted that technological singularity — the point at which AI exceeds human intelligence — would occur by 2045. From then on, technological progress would be overtaken by “conscious robots” and increase exponentially, ushering in a better, post-human future.

Following the singularity, AI in the form of self-replicating nanorobots could spread across the universe until it becomes “saturated” with intelligent — albeit synthetic — life, Kurzweil said.

Echoing philosopher Immanuel Kant, Kurzweil referred to this process as the universe “waking up.”

Yet now that the singularity is almost upon us, Musk and company appear to be having second thoughts. The release of ChatGPT last year has seemingly caused panic among these former AI evangelists, prompting them to shift from extolling the benefits of super-intelligent machines to figuring out how to stop them from going rogue.

Unlike Google’s search engine, which presents users with a list of links, ChatGPT can answer questions fluently and coherently.

Recently, a philosopher friend asked ChatGPT: “Is there a distinctively female style in moral philosophy?” and sent the answers to colleagues.

One found it “uncannily human.”

To be sure, she wrote: “It is a pretty trite essay, but at least it is clear, grammatical, and addresses the question, which makes it better than many of our students’ essays.”

In other words, ChatGPT passes the Turing test, exhibiting intelligent behavior that is indistinguishable from that of a human being. Already, the technology is turning out to be a nightmare for academic instructors, and its rapid evolution suggests that its widespread adoption could have disastrous consequences.

So, what is to be done? A recent policy brief by the Future of Life Institute, which is partly funded by Musk, suggests several possible ways to manage AI risks. Its proposals include mandating third-party auditing and certification, regulating access to computational power, creating “capable” regulatory agencies at the national level, establishing liability for harms caused by AI, increasing funding for safety research and developing standards for identifying and managing AI-generated content.

However, at a time of escalating geopolitical conflict and ideological polarization, preventing new AI technologies from being weaponized, much less reaching an agreement on global standards, seems highly unlikely.

Moreover, while the proposed moratorium is ostensibly meant to give industry leaders, researchers and policymakers time to comprehend the existential risks associated with this technology and to develop proper safety protocols, there is little reason to believe that today’s tech leaders can grasp the ethical implications of their creations.

In any case, it is unclear what a pause would mean in practice. Musk, for example, is reportedly already working on an AI start-up that would compete with OpenAI.

Are our contemporary Victor Frankensteins sincere about pausing generative AI, or are they merely jockeying for position?

Robert Skidelsky, a member of the British House of Lords, is professor emeritus of political economy at Warwick University.

Copyright: Project Syndicate
