Artificial intelligence systems capable of feelings or self-awareness are at risk of being harmed if the technology is developed irresponsibly, according to an open letter signed by AI practitioners and thinkers including Sir Stephen Fry.

More than 100 experts have put forward five principles for conducting responsible research into AI consciousness, as rapid advances raise concerns that such systems could be considered sentient.

The principles include prioritizing research on understanding and assessing consciousness in AIs, in order to prevent “mistreatment and suffering.”

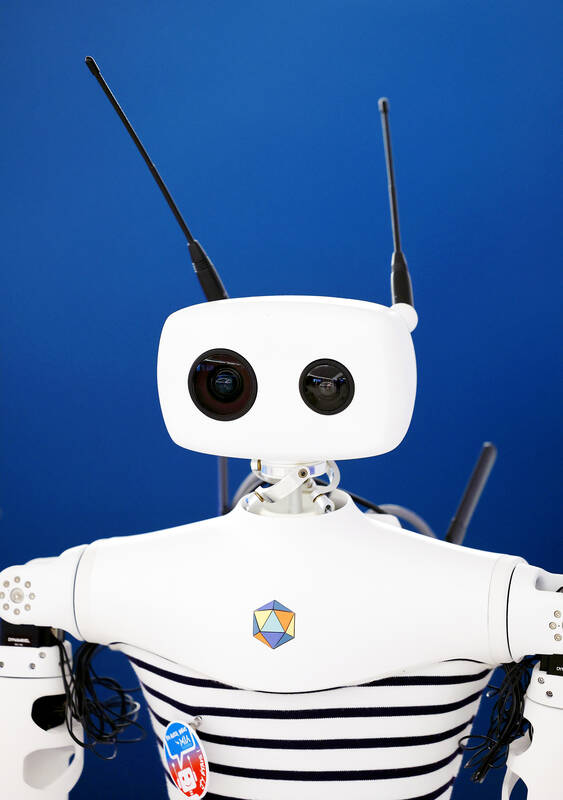

Photo: Bloomberg

The other principles are: setting constraints on developing conscious AI systems; taking a phased approach to developing such systems; sharing findings with the public; and refraining from making misleading or overconfident statements about creating conscious AI.

The letter’s signatories include academics such as Sir Anthony Finkelstein at the University of London and AI professionals at companies including Amazon and the advertising group WPP.

It has been published alongside a research paper that outlines the principles. The paper argues that conscious AI systems could be built in the near future — or at least ones that give the impression of being conscious.

“It may be the case that large numbers of conscious systems could be created and caused to suffer,” the researchers say, adding that if powerful AI systems were able to reproduce themselves it could lead to the creation of “large numbers of new beings deserving moral consideration.”

The paper, written by Oxford University’s Patrick Butlin and Theodoros Lappas of the Athens University of Economics and Business, adds that even companies not intending to create conscious systems will need guidelines in case of “inadvertently creating conscious entities.”

It acknowledges that there is widespread uncertainty and disagreement over defining consciousness in AI systems and whether it is even possible, but says it is an issue that “we must not ignore.”

Other questions raised by the paper focus on what to do with an AI system if it is defined as a “moral patient,” an entity that matters morally “in its own right, for its own sake.” In that scenario, it asks whether destroying the AI would be comparable to killing an animal.

The paper, published in the Journal of Artificial Intelligence Research, also warns that a mistaken belief that AI systems are already conscious could waste political energy on misguided efforts to promote their welfare.

The paper and letter were organized by Conscium, a research organization part-funded by WPP and co-founded by WPP’s chief AI officer, Daniel Hulme.

Last year a group of senior academics argued there was a “realistic possibility” that some AI systems would be conscious and “morally significant” by 2035.

In 2023, Sir Demis Hassabis, the head of Google’s AI program and a Nobel prize winner, said AI systems were “definitely” not sentient currently but could be in the future.

“Philosophers haven’t really settled on a definition of consciousness yet but if we mean sort of self-awareness, these kinds of things, I think there’s a possibility AI one day could be,” he said in an interview with US broadcaster CBS.
