In the not-so-distant future, students will be able to graduate from high school without ever touching a book. Twenty years ago, they could graduate from high school without ever using a computer. In only a few decades, computer technology and the Internet have transformed the core principles of information, knowledge and education.

Indeed, today you can fit more books on the hard disk of your laptop computer than a bookstore carrying 60,000 titles holds. The number of Web pages on the Internet is said to have exceeded 500 billion. Printed as 500-page books weighing 453g each, they would fill 10 modern aircraft carriers.

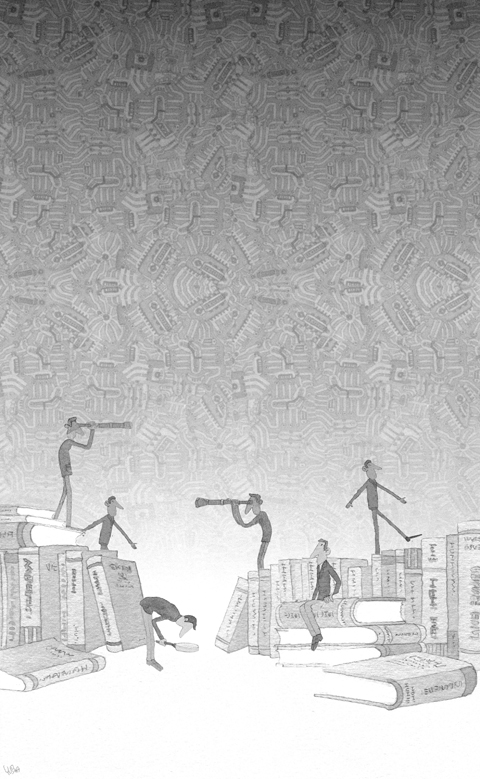

Such analogies help us visualize the immensity of the information explosion and validate the concerns that come with it. Web search engines are the only practical means of navigating this avalanche of information, so they should not be mistaken for an optional accessory, one more button to play with, or a tool for locating the nearest pizza place. Search engines are the single most powerful distribution points of knowledge, wealth and, yes, misinformation.

When we talk about Web search, the first name that comes to mind is, of course, Google. It is not far-fetched to say that Google made the Internet what it is today. It shaped a new generation of people who are strikingly different from their parents. Baby boomers may be best placed to appreciate this, since they experienced Rock ‘n’ Roll as kids and Google as parents.

Google’s design was based on statistical algorithms that rank pages by popularity. But search technologies built on statistical algorithms cannot address the quality of information, simply because high-quality information is not always popular, and popular information is not always high-quality. You can collect statistics until the cows come home, but you cannot expect statistics to do more than they were designed to do.

In addition, statistics collection systems are backward-looking. They need time for people to make referrals and time to collect them. As a result, new publications and dynamic pages whose content changes frequently fall outside the reach of popularity-based methods, and search results for such material are vulnerable to rudimentary manipulation techniques.

For example, the inefficiencies of today’s search engines have created a new industry called Search Engine Optimization, which focuses on strategies to make Web pages rank high against the popularity criteria of Google-esque search engines. It is a billion-dollar industry. If you have enough money, your Web page can be ranked above many others that are more credible or of higher quality. Never before the emergence of Google has quality information been so vulnerable to the power of commercialism.

Information quality, molded in the shadow of Web search, will determine the future of mankind, but ensuring quality will require a revolutionary approach, a technological breakthrough beyond statistics. This revolution is underway, and it is called semantic technology.

The underlying idea behind semantic technology is to teach computers how the world operates. For example, when a computer encounters the word “bill,” it would know that “bill” has 15 different meanings in English. When the computer encounters the phrase “killed the bill,” it would deduce that “bill” can only be a proposed law submitted to a legislature, and that “kill” could mean only “stop.”

By contrast, “kill bill” could only be the title of the film of that name. In the end, a series of deductions like these would handle entire sentences and paragraphs to yield an accurate representation of the text’s meaning.

To achieve this level of dexterity in handling language with computer algorithms, an ontology must be built. An ontology is neither a dictionary nor a thesaurus. It is a map of interconnected concepts and word senses that reflects relationships such as the one between the concepts of “bill” and “kill.”
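To make the “bill” and “kill” example concrete, here is a deliberately tiny sketch in Python. It assumes a hypothetical ontology in which each word sense is linked to a handful of related concepts, plus a hypothetical lemma table, and it picks the sense whose links overlap most with the surrounding words. This simple overlap heuristic (in the spirit of the classic Lesk method) is a stand-in chosen for illustration; it is not the method used by Hakia or any other company named here, and real ontologies are vastly larger.

```python
from __future__ import annotations

# Hypothetical toy ontology: word -> sense -> concepts that sense is linked to.
ONTOLOGY: dict[str, dict[str, set[str]]] = {
    "bill": {
        "proposed_law": {"kill", "senate", "legislature", "vote", "pass", "veto"},
        "invoice": {"pay", "restaurant", "amount", "due"},
        "beak": {"bird", "duck", "feather"},
    },
    "kill": {
        "stop": {"bill", "proposal", "senate", "project"},
        "cause_death": {"bird", "animal", "person", "weapon"},
    },
}

# Hypothetical lemma table so that inflected forms map to ontology entries.
LEMMAS: dict[str, str] = {"killed": "kill", "kills": "kill", "bills": "bill"}


def lemmatize(token: str) -> str:
    """Lower-case a token and map it to its base form if the table knows it."""
    t = token.lower()
    return LEMMAS.get(t, t)


def disambiguate(word: str, context: list[str]) -> str | None:
    """Return the sense of `word` sharing the most concepts with the context.

    Returns None if the word is unknown or no sense overlaps the context.
    On a tie, the sense listed first in the ontology wins.
    """
    senses = ONTOLOGY.get(word)
    if not senses:
        return None
    # Normalize the context and drop the target word itself.
    context_set = {lemmatize(t) for t in context} - {word}
    best_sense, best_score = None, 0
    for sense, related in senses.items():
        score = len(related & context_set)
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense
```

With the sentence “Bush killed the last bill in the Senate,” the words “kill” and “Senate” both point to the legislative sense of “bill,” while “bill” and “Senate” point to the “stop” sense of “kill.” A phrase such as the film title “Kill Bill” would need its own multiword entry, which this sketch does not attempt.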

Building an ontology that encapsulates the world’s knowledge may be an immense task, comparable in effort and expertise to compiling a large encyclopedia, but it is feasible. Several start-up companies around the world, such as Hakia, Cognition Search and Lexxe, have taken on this challenge. The results of these efforts remain to be seen.

But how would a semantic search engine solve the information quality problem? The answer is simple: precision. Once computers can handle natural language with semantic precision, high-quality information will no longer need to become popular before it reaches the end user, as Web search requires today.

Semantic technology also promises other means of assuring quality, by detecting the richness and coherence of the concepts in a given text. If a text includes a phrase like “Bush killed the last bill in the Senate,” does the rest of it contain coherent, related concepts? Or is the page spam, a collection of popular one-liners wrapped in ads? Semantic technology can tell the difference.

Given humans’ limited reading speed (200 to 300 words per minute) and the enormous volume of available information, effective decision-making today calls for semantic technology in every aspect of knowledge refinement. We cannot afford a future in which knowledge is at the mercy of popularity and money.

Riza Berkan is a nuclear scientist with a specialization in artificial intelligence, fuzzy logic and information systems. He is the founder of Hakia.

COPYRIGHT: PROJECT SYNDICATE
